Correlation Doesn’t Mean What You Think It Does

Several months ago at SMX Advanced in Seattle, Rand Fishkin of SEOMOZ gave a presentation on Google vs. Bing Correlation of Ranking Factors. Since then, I’ve seen these charts and data surface on several SEO blogs, forums, and chat rooms, and every time I see them being used to back up some crackpot theory, I cringe.

While I applaud Rand and SEOMOZ for attempting to bring science to the SEO community, sometimes I think they try to stretch that science too far – and this article is one of those cases.

The part explaining the difference between correlation and causation is spot on, but unfortunately the rest of the article is completely irrelevant bullshit. There’s nothing wrong with the data or how they collected it; it’s just that none of the correlations are strong enough to warrant publishing. None of it tells us anything.

The problem here is that most people using this data to support their claims have no idea what correlation coefficients are, let alone how to interpret them. Correlation coefficients range from -1 to 1. If two factors have a coefficient of 0, there is no linear relationship between them. If the coefficient is positive, they’re positively correlated: as one increases, so does the other, and a coefficient of exactly 1 means they move together perfectly. For example, the amount of weight I gain is positively correlated with the amount of food I eat, and a person’s income is positively correlated with their level of education.
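For readers who want to see where the number comes from, here is a minimal sketch of the Pearson correlation coefficient (the most common kind; the post doesn’t say which coefficient SEOMOZ used). `pearson_r` is a hypothetical helper written for illustration, and the calorie and weight figures are made up. The second example shows why a coefficient of 0 only rules out a *linear* relationship: y = x² is completely determined by x, yet its Pearson coefficient on symmetric data is exactly 0.

```python
import math

def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Numerator: sum of products of each point's deviations from the means
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Divide by the spread of each variable so the result lands in [-1, 1]
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (spread_x * spread_y)

# Hypothetical numbers: daily calories vs. pounds gained per month
calories = [1800, 2000, 2200, 2500, 3000]
pounds_gained = [0.0, 0.5, 1.0, 1.5, 3.0]
print(round(pearson_r(calories, pounds_gained), 2))  # strongly positive

# A perfect but non-linear relationship: y = x**2 on symmetric x values
xs = [-2, -1, 0, 1, 2]
ys = [x ** 2 for x in xs]
print(pearson_r(xs, ys))  # 0.0, even though ys is fully determined by xs
```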

Negative correlation is the opposite: as one factor increases, the other decreases. For example, a student’s sick days and grades are negatively correlated, meaning that the more school a child misses, the worse his grades are.

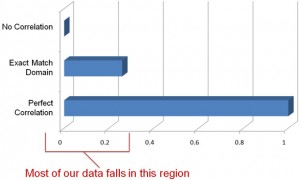

If you were to graph points on an X,Y coordinate system, the graph would look more and more like a straight line as the correlation numbers approach 1 or -1. Here’s a quick graph of some correlation coefficients:

The problem is that the SEOMOZ article (and several SEOs citing it) doesn’t properly interpret the correlation coefficients or what they signify. Let’s take a look:

By SEOMOZ’s own admission, most of their data has a correlation coefficient of about 0.2, but what does that mean? In scientific terms, a 0.2 correlation is weak: these things aren’t related in any useful way. Squaring the coefficient (0.2 × 0.2 = 0.04) shows that one factor accounts for only about 4% of the variation in the other. That means most of the SEOMOZ data is pretty meaningless.
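Squaring a coefficient is a handy sanity check. Assuming these are Pearson-style coefficients, r² (the coefficient of determination) is the fraction of the variation in one variable that a straight-line fit on the other explains. The sketch below, using a hypothetical helper `shared_variance`, applies it to the coefficients quoted in this post: 0.2 explains about 4%, 0.28 under 8%, 0.04 well under 1%, and even 0.45 only about 20%.

```python
def shared_variance(r):
    """Fraction of variance explained by a linear fit: r squared."""
    return r * r

# Coefficients quoted in this post
examples = [
    ("typical SEOMOZ factor", 0.2),
    ("example scatter plot", 0.28),
    ("ALT attributes", 0.04),
    ("suggested threshold", 0.45),
]
for label, r in examples:
    print(f"{label:>22}: r = {r:.2f}, r^2 = {shared_variance(r):.1%}")
```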

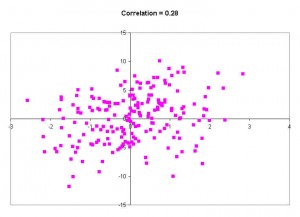

To further illustrate, here’s what a graph of a .28 correlation coefficient looks like. (note, this is a higher correlation that most of the SEOMOZ data)

These numbers are all over the place: there’s no clear pattern and certainly no trend. I could draw several different trend lines through this data that all tell different stories. In other words, the variables are barely related and can’t be used to prove anything. There’s no real correlation here to speak of; 0.28 is simply too close to zero to matter.
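It’s worth separating “statistically significant” from “strong.” The standard test for whether a Pearson coefficient differs from zero converts r into a t statistic with n − 2 degrees of freedom, so the verdict depends on the sample size n as much as on r. The sketch below uses a hypothetical helper `t_statistic` and made-up sample sizes; with this formula, r = 0.28 clears the usual 5% bar (|t| above roughly 2) at around 50 observations, yet r² stays near 0.078 no matter how much data you collect.

```python
import math

def t_statistic(r, n):
    """t statistic for testing whether a Pearson r differs from zero.

    Follows a t distribution with n - 2 degrees of freedom when the true
    correlation is zero. Requires n >= 3 and -1 < r < 1.
    """
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)

r = 0.28
for n in (10, 30, 100, 1000):  # hypothetical sample sizes
    print(f"n = {n:4d}: t = {t_statistic(r, n):5.2f}, r^2 = {r * r:.3f}")
```

A significant result only says the true coefficient is probably not exactly zero; it says nothing about whether the relationship is strong enough to base decisions on.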

When factors have a correlation coefficient this small, it usually means there’s at most an incidental relationship, nothing worth publishing or reporting. And this is just the random example I picked. When they start talking about ALT attributes having a correlation of 0.04, it gets even crazier. There’s nothing meaningful to be learned from data with coefficients between 0 and 0.1.

To be honest, I wouldn’t base any decisions on the data unless it had a correlation coefficient of around 0.45 or higher.

There are a few other issues, too.

One of the charts suggests that .org domains rank better than .com domains. That’s great for sites (like seomoz.org) that have a .org in their name, but is it really true? When you look at the data, two things stick out. First, Wikipedia is a .org and ranks well for many terms. In fact, it ranks so well that it probably should have been treated as an outlier and discarded. Second, the data excludes what they call “branded terms,” but the vast majority of .com domains are in fact brands. Having done SEO for several Fortune 500 clients, I can tell you that big brands mostly target branded terms. That has to skew the data.

And that’s part of the problem in SEO. In addition to collecting the data, we have to sell stuff. Often it’s way too easy to present the data in a way that helps us sell the narrative we want. I’m not out to get SEOMOZ, and I don’t have any problems with them. I actually admire them, because at least they’re collecting data. That’s way more than many others are doing. Collecting data is just the first step though. The real magic happens when you properly apply the data.

Let’s face it: the SEO community isn’t very scientific. We tend to accept anything Matt Cutts, SEOMOZ, or Danny Sullivan says as gospel. But should we? Sure, they have great reputations, but sometimes they make mistakes too. (Remember when several SEOs sold PageRank sculpting services even though we now know it never worked?) I’m not a very religious man, so I tend to take everything with a degree of skepticism, and I think that’s the approach more people in the community should take. We need more posts like the SEOMOZ one I linked above, but we also need more people willing to question the data and run tests of their own. We get plenty of scrutiny from those outside of SEO, but that’s because we don’t get enough scrutiny from those inside the community. Only when we start scrutinizing our own work will people start taking SEO more seriously.

Disclaimer: The views in this post are mine, and mine alone. I’ve said that a million times before but I just wanted to repeat that here.

September 17th, 2010