Archive for June, 2010

SEO Isn’t science. It’s not rocket surgery either, but it’s definitely not science.

I’m not sure if having a computer science degree makes me a scientist or not, but I do know how to spot science. See, science has a definition. According to Wikipedia, that definition is: a systematic enterprise of gathering knowledge about the world and organizing and condensing that knowledge into testable laws and theories.

It’s that last bit that causes all sorts of problems for traditional SEO. We SEOs are pretty good at gathering and organizing knowledge. We’re even better when it comes to sharing it, but when it comes to testable laws and theories we kind of suck.

Ever since Branko’s presentation at SMX (which I liveblogged, by the way) I’ve been thinking about testing and the scientific method – and how little of it we actually do in the SEO community. It prompted me to start testing META descriptions with Alan Bleiweiss. Even though we both “knew” what the result was going to be, we did a scientific test anyway – and that’s what the SEO community needs more of.

The problem though, is that in addition to being technologists, SEOs also have to be marketers – and marketing and science don’t really have the best relationship. Marketing tends to be about making claims whereas science is more about collecting and publishing data. As SEO/Marketers we not only have to do the research, but we have to then go in front of a client and convince them why they should spend money to make changes. Often, data alone isn’t enough to sway a client.

A successful SEO is one who is able to wear a science hat and a marketing hat, but a great SEO is one who knows when to wear each one of those hats.

It’s not that SEO can’t be science, it’s just that we’re not doing a good enough job of making it a science – and that’s bad for the industry. Unverifiable claims are one of the quickest ways to get the snake oil reputation. Science is how that nasty reputation can be avoided.

Unfortunately, many SEOs (myself included) get too caught up in the lifestyle to worry about actually doing SEO. Talking about SEO, doing the conference circuit, and living the A-list twitter life are all things that come to those who make SEO claims – but sometimes we get so caught up in that life and making those claims that we forget to actually test our claims.

Sometimes, it’s our own egos that prevent what we do from being called science. “I’ve been doing this for X years, it works, I don’t need to test that, everybody knows it.” How many times have you said something like that? Read through the comments on Alan’s META description test and count the passionate opinions there that are solely based on ego without any data to support them. There are quite a few.

The true SEO scientist doesn’t just make a claim. He gathers data, then posts that data for others to examine. The problem though, is that posts full of data don’t get retweeted and they don’t get onto the front page of Sphinn. Posts full of claims however do get lots of retweets.

It’s that community aspect of SEO that’s holding us back from achieving our true potential. I can hear a few people muttering under their breath “Oh, he’s just jealous that he’s not an A-lister” and well, that’s true, but it’s not the motivation for this post. I firmly believe that all the A-list SEO people have earned their status. They did awesome work, wrote great blog posts, and did everything else to earn the success they’re enjoying today.

Science and fandom don’t mix.

The problem with reputation though, is that people stop questioning A-listers. They’re no longer required to produce data or back up their claims. While they’ve deserved that right, it’s not good for science. A-listers and SEO rockstars can be wrong too. Nobody’s perfect. Just look at how many advocated pagerank sculpting several months after Google quietly changed how nofollow worked. (Then, look at how many refused to admit they were wrong.)

Pay attention next time Danny Sullivan or Lisa Barone writes a blog post. Almost instantly they’ll get 10-12 retweets. (And usually, they deserve them too as they write awesome stuff.) The problem here isn’t the retweets, it’s the number of people who retweet before they’ve actually had time to read the blog post. If retweets are the social equivalent of links on web pages, then many people are turning their twitter feeds into free-for-all directories. You wouldn’t recommend a doctor that you’ve never visited, so why would you recommend a blog post that you haven’t read? What if Danny’s blog had been hacked to include a Viagra post? I bet he’d still get several blind retweets. Stuff like that doesn’t help SEO get taken seriously as a science.

We’ve all heard that the squeaky wheel gets the grease, but in SEO it’s the loudest shouter who gets the attention. Usually, those shouting loud know their shit, but not always. It’s important for SEO that we still continue to scrutinize and think critically about what people are saying – no matter who’s saying it. Everybody makes mistakes.

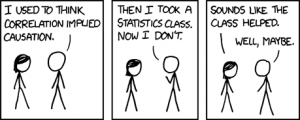

When we’re dealing with over 200 inputs like in SEO, it’s very easy to mix signals, miss relationships, or confuse correlation and causation. Rather than simply making claims, we as an industry need to focus on becoming more scientific. Don’t be afraid to run tests, share your data, and discuss methodologies. You may be right, you may be wrong, but either way you’ll be starting a scientific discussion in which everyone is bound to learn something – and that will make us all better SEOs.

SEO in its current form is not science. It’s starting to be though. Several great people and companies are attempting to put the science back in SEO, and I’m excited to see where our industry can go once more people get on board.

June 24th, 2010

Update: Google’s Maile Ohye has Confirmed my hypothesis that META descriptions do NOT affect relevancy. But please, still read this post to learn about testing SEO.

Last week after SMX, Alan Bleiweiss started running a META Description Test to see whether or not the META description has any effect on search engine rankings in Google.

The article generated lots of comments, and several SEOs (including Jill Whalen) argued that the META description does affect rankings. Somebody even claimed that Google employee Maile Ohye said so at SES Toronto, but I was unable to verify this claim. For years, my theory has been otherwise.

From my experience, the best way to come up with a hypothesis for an SEO test is to do just that – think of things from Google’s point of view. This is where knowing how to program can greatly benefit an SEO. Ask yourself “if I were coding a search engine, would this make sense? Would it make my results more useful? How could it be spammed? How would I do it? Is it robust? Does it Scale?” You’ll find that answering those questions from an engine’s point of view will almost never steer you wrong with regard to SEO theory.

I didn’t believe that META descriptions affected rank so I decided to join in with a test of my own. We had just finished listening to a panel talk about testing SEO so testing was still fresh in my mind. I also wanted to keep the test as scientific as possible, so here’s what I did:

I created a new page and set the META description to a Unique Phrase that currently had no results for an exact match.

I then changed the META description of an already established site to also include a different unique phrase. The reason I did this is because Jill argued that new pages and old pages may be treated differently – so I didn’t want any bias in my data.

I then planted several links to both of the sites on various blogs, including the test post you see below this one (which will be removed in a few days.)

On the existing page, Google re-visited the page rather quickly and updated its cache. On the new page, it took just about 24 hours. That was several days ago and the spiders have been back several times since then.

After several days of waiting, neither the new page nor the old page rank for the unique phrases contained solely within their META descriptions. You can verify that by clicking the two “unique phrase” links above.

For the sake of completeness, they don’t rank in Bing either.

No matter what I tried, I was not able to make a page rank solely based upon its meta description.

Do some sites appear to rank for the text in their descriptions? Most likely, but we can’t confuse that correlation with causation.

If your site is properly optimized, the terms and phrases contained within your META description will also be the same terms and phrases used both on your site and in links to your site. Several tests have shown that when people see their search terms in titles and descriptions they tend to click through at higher frequencies (just ask your paid search people about this one!) Therefore, it’s a very common strategy to use the same wording in META descriptions as on the site – which then causes people to use that same wording in links.

Also, when it comes to sites that haven’t changed their META descriptions in a while, a funny thing happens: people scrape that content and use it on their spammy sites. Those people even sometimes link that scraped description back to the original site. In that case, it’s very possible that a site would appear to rank for terms contained within the meta description, but it may not actually be because of the meta description.

I’m glad that my test shows what I thought it would show – not simply because I enjoy being right, but because META descriptions are very easy to spam.

In most cases, Google doesn’t actually use the META description you provided. They prefer instead to use a snippet of text taken off of the page. It all goes back to the same “increase clickthrough when your terms appear” idea mentioned above. This means that users very rarely see the meta description you provide. Google has long said that they don’t like to rank sites based on factors that don’t appear to users (see META keywords) as they often invite spam.

So there are the results – please leave your insights, comments, or observations in the comments. Also, please run your own tests if you see fit. I’d love to see your results and compare your findings with my own.

June 14th, 2010

If you missed SMX this year, you missed a great conference. I’m really thankful that I had the opportunity to attend, and I’m looking forward to coming back in future years.

I did a lot of live-blogging during the sessions, and lots of tweeting – but I wanted to collect some of the important points here on the page. This is stuff I learned not only from sessions, but from talking to other industry veterans at the bar as well. There’s a few unique insights here that many may not be aware of.

Here’s my summary of important takeaways from SMX:

1.) Page speed is a minor factor in rankings. Unless you have noticeable problems, it’s not worth worrying about for SEO. Worry about your user experience and your SEO will be fine.

2.) If you’re worrying about bounce rates affecting SEO, stop! Worry about lowering that bounce rate because it’s costing you customers. Matt also said that bounce rate is NOT used directly in search rankings.

3.) If you change your screen resolution, Google shows fewer images in results to accommodate the smaller screen size.

4.) When it comes to vertical search and trying to rank for videos, ask yourself this: is a video really the point of entry I want my customers to choose? Will it have an effect on my conversions? Ideally, you don’t want a video to show up and push down your web page that already ranks well – unless you’re a video specific niche.

5.) If you can’t get a straight answer out of Matt Cutts, judge by his reactions or whether or not he’s looking up and to the left.

6.) Pagerank DOES decay over 301 redirects. Especially if you have multiple 301 redirects chained together. I asked Matt about this in the bar with an example of a chain of 5 redirects and he said “google says that’s too many redirects.” He also hinted that most of the pagerank from links to the original URLs would be gone.

7.) If you have a 301 redirect or rel=canonical on a page, it will slow the crawl rate at which googlebot fetches your pages. This came directly from Maile. If your site is down for maintenance, use a 500 response to avoid reduced crawl frequency.

8.) When measuring page speed, Google uses the toolbar time and not the googlebot time – so javascript and things like that actually matter. The estimate given in webmaster tools is very close to what the algorithms use.

9.) Marketers talk about claims. Scientists talk about data. Are you an SEO marketer or an SEO scientist? Too many SEOs are focused on making claims rather than letting the data speak for itself. Tip: Test your shit, publish your data, open it up to peer review.

10.) According to Maile Ohye, the goal of Mayday was to make long tail results more useful to users. Remember, searchers don’t think of their query as “long tail.” The goal was to remove pages that were technically not spam, but not very useful. We called these pages “weeds.” Many people speculated that this was a response to Mahalo, eHow, and Demand media, but we got an “up and to the left” look out of Matt on this one…

11.) When it comes to paid links and link farms, Google’s first attempt is to take a “scalpel” approach where they just cut those links out of the graph. There’s no use worrying about a competitor damaging your site with links.

12.) Pretty soon Google rich snippets will happen automatically – just make sure you’ve got microformats on your site.

13.) Google has the ability to determine sentiment, but it is NOT used in rankings. Google analytics data is also NOT used in rankings.

14.) Real time search rankings are calculated based on: Author Quality, Site Authority, Trust & Relevancy. In other words, it’s not about how many followers you have, it’s about how many quality followers you have.

15.) To get into bing news, email [email protected] with a request and an rss feed.

16.) Google supports video sitemaps, Bing doesn’t. Both Bing and Google support local listings.

17.) You can disable Bing’s document preview with a meta tag: <meta name="msnbot" content="nopreview">

18.) Bing & Yahoo rankings will be exactly the same, the display and user experience will differ. Sadly, no decision was made on the future of Yahoo Site Explorer.
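Point 6 above is easy to audit before a crawler ever finds the problem. Here is a minimal sketch, assuming a hypothetical in-memory map of URL to redirect target (a real audit would issue HTTP requests instead). The `max_hops` default of 5 simply mirrors the chain length from the bar conversation; it is not a documented Google limit.

```python
def redirect_chain(url, redirects, max_hops=5):
    """Follow redirect targets and return the list of URLs visited.

    `redirects` is a hypothetical dict mapping a URL to the URL it 301s to.
    Raises ValueError on a loop or once the chain reaches `max_hops` redirects.
    """
    chain = [url]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError("redirect loop at " + target)
        chain.append(target)
        if len(chain) - 1 >= max_hops:
            raise ValueError("too many redirects: %d hops" % (len(chain) - 1))
    return chain


# Made-up example data: two chained 301s.
redirects = {
    "http://example.com/old": "http://example.com/older",
    "http://example.com/older": "http://example.com/new",
}
chain = redirect_chain("http://example.com/old", redirects)
print(len(chain) - 1, "hops:", " -> ".join(chain))
```

Anything that reports more than one hop is a candidate for pointing the first URL straight at the final destination.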

So there you go. I learned a lot at SMX, not just about search engines and SEO but about the SEO community as a whole. All in all, it was a very interesting time and I hope you’ve learned something from my summary. I’ve learned a few things, and I’ve even come back with a few things that I need to test a bit more. I’ll close this article by mentioning two of my favorite quotes overheard at the event:

“The SEO community is like high school” – Matt Cutts talking to me at the bar.

“Insecure High School loner seeking vampire who sparkles.” – John Lebaron in his Craigslist ad.

June 10th, 2010

The “SEO Vets Take All Comers” session with Danny, Rae, Bruce Clay, Alex Bennert, Vanessa Fox, Greg Boser, Todd Friesen, and Stephan Spencer is starting soon. I’ve got my seat in the front row and I’ll be liveblogging soon.

screw it ustream: http://ustre.am/iWi6

Phone died during ustream. I’m going to pick up here liveblogging.

Rae has lost her voice. One of the panelists said it’s an early xmas gift for the rest of us.

Bruce and Vanessa are talking about news headlines. Don’t use “giant wave” in the headline if you’re talking about a Tsunami. Bruce says they can’t correct after the fact on CNN – so they have to do it during creation.

Vanessa is talking about crawling again. Whoever asked this question didn’t go to the last architecture session. See my notes below for the answer here, I’m not retyping it.

add &start=990 to google to see the end of the results. &filter=0 will show you omitted results
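If you would rather not hand-edit the address bar, the two parameters can be bolted on in code. This is only a sketch: the `google_url` helper and its argument names are made up for illustration, and the only things taken from the session are the `start=990` and `filter=0` parameters themselves.

```python
from urllib.parse import urlencode


def google_url(query, start=None, show_omitted=False):
    """Build a Google search URL with the optional start/filter parameters."""
    params = {"q": query}
    if start is not None:
        params["start"] = start   # jump toward the end of the result set
    if show_omitted:
        params["filter"] = 0      # include the "omitted results"
    return "https://www.google.com/search?" + urlencode(params)


print(google_url("site:example.com", start=990))
print(google_url("site:example.com", show_omitted=True))
```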

Don’t worry about bounce rate affecting SEO – worry about bounce rate affecting conversions.

Since Cutts won’t give a straight answer, a good strategy is to ask him questions and judge his physical reaction.

Matt’s taking notes about changing the “omitted results” list’s name to “a list of crap” I like that Idea. “would you like to see a list of crap from this website?”

Many SEOs here don’t believe official Google answers about crawlability. that’s shocking – why would Google lie about how they crawl the web? There’s no motive. Google wants everything crawlable and findable – it gives them a better search engine.

Should you ever stop link building? The answer: if you owned a brick and mortar store would you ever stop trying to get customers?

Nobody admits to buying links, yet lots of people do it. If you get penalized, you have to clean it up and file a re-inclusion request.

Not sure why there’s so much paranoia over linked networks of sites or paid links. You have to go very obsessive to get banned. In most cases, I don’t think many SEOs have to worry about links in bad places.

Google recently penalized Google Japan for buying links. If Google is willing to penalize themselves, they may penalize you. Google’s really good at finding links.

So what happens if your competitor buys links for your site…. Vanessa says it’s unlikely that you could get penalized in this case as bans usually result from multiple factors.

Don’t buy links, just give out free phones in return for links.

Is facebook and the open graph going to kill Google? No – but should you diversify into social media? Yes.

Rae says she’ll take any links, she doesn’t care where they come from. I’m in the same boat. One of the biggest traffic drivers to NoSlang.com is a nofollowed link on somebody’s site. Sometimes we lose track of why we needed links in the first place.

Don’t go crazy with universal search. Do you really want a video to rank higher than the link to your site where people can buy something? Most videos don’t have a link to go complete a conversion after watching it…those links that ranked do.

Alex Bennert says to email her if WSJ mentions your site but doesn’t link to it. Good to know, they’ve done that to noslang.com in the past.

June 9th, 2010

The live blog of “Build it Better: site architecture for the Advanced SEO” will start momentarily. Vanessa Fox, Adam Audette, Maile Ohye, Lori Ulloa and Brian Ussery will be speaking. Stay tuned here and refresh for my thoughts, insights, & recap.

Vanessa and Maile must have a lot of clout here, as there’s a facebook session going on across the hall and the site architecture room is standing room only right now.

Lots of the room has complicated problems – not sure how many are related to search though.

Maile is up first. She’s using Google store. It has 158 products but 380,000 URLs indexed. How does that happen?

First point: protocol and domain case insensitivity. http://www.example.com and HTTP://WWW.EXAMPLE.COM can be different.

Advocating a consistent url structure to reduce duplication and facilitate accurate indexing. suggestion: keep everything lowercase.
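A rough sketch of what “keep everything lowercase” could look like when generating or auditing links. The `normalize_url` helper is hypothetical, and lowercasing the path is only safe if your server treats paths case-insensitively or redirects to the lowercase form, which is an assumption about your setup rather than anything Maile said.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize_url(url, lowercase_path=True):
    """Return `url` with a lowercase scheme and host, and optionally path."""
    parts = urlsplit(url)
    path = parts.path or "/"          # treat a bare domain as "/"
    if lowercase_path:
        path = path.lower()
    return urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), path, parts.query, parts.fragment)
    )


print(normalize_url("HTTP://WWW.EXAMPLE.COM/Products/Shirt"))
print(normalize_url("http://www.example.com"))
```

Running every internal link through one function like this means the same page is only ever linked under one spelling.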

301s and rel=canonical can cause slower crawling. Google crawls 300 and 400 status less than 200s. If your site is down for maintenance use a 500 response code to not reduce crawl times.

Don’t be like the Google store, use standard encodings and &key=value stuff. no crazy stuff in place of key value pairs.
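For what “standard encodings and key=value” might mean in practice, here is a small sketch using Python’s standard library. The `product_url` helper and the parameter names are invented for the example; sorting the keys is my own addition so that the same set of parameters always produces the same URL.

```python
from urllib.parse import parse_qsl, urlencode


def product_url(base, **params):
    """Build a URL with standard, consistently ordered key=value pairs."""
    return base + "?" + urlencode(sorted(params.items()))


url = product_url("http://www.example.com/item", size="m", color="light blue")
print(url)
print(parse_qsl(url.split("?", 1)[1]))
```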

Google crawl prioritization.

Indexing priorities: URLs with updated content, new urls with probability of unique/important content.

Sitemap information (xml here) is used.

Ability to load the site (uptime, load, etc) also comes into play.

To increase Googlebot visits:

strengthen indexing signals above. (links, uniqueness, freshness)

Use the proper response codes.

Keep pages closer to the homepage. Further clicks away = less frequent indexing.

Use standard encodings

Prevent the crawling of unnecessary content.

Improve “long tail content” Be wary that we as webmasters call it long tail, but to users it’s the content they want.

Seek out and destroy duplicate content and use the canonical and 301. Google can find these for you in webmaster tools.

Include Microformats to enhance results with rich snippets. Gives the ability to include reviews, recipes, people, events, etc. Her example is the hrecipes format.

Create video sitemaps/mRSS feed. (Only Google supports these currently. BingHOO says they’re working on it.)
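Maile’s point about keeping pages close to the homepage can be measured on your own site. Below is a minimal sketch, assuming you already have a hypothetical map of each page to the pages it links to (from a crawl export, for example). It does a breadth-first walk from the homepage and reports how many clicks away each page is.

```python
from collections import deque


def click_depths(links, home):
    """Return {page: minimum number of clicks from `home`} via breadth-first search."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:          # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Made-up site structure for illustration.
site = {
    "/": ["/shirts", "/about"],
    "/shirts": ["/shirts/red", "/shirts/blue"],
    "/shirts/red": ["/shirts/red/reviews"],
}
for page, depth in sorted(click_depths(site, "/").items(), key=lambda item: item[1]):
    print(depth, page)
```

Pages that never show up in the result are not reachable from the homepage at all, which is worth knowing too.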

Adam Audette is up now and he’s shilling Vanessa’s book – marketing in the age of google.

First, make the best user experience, then leverage that for SEO. That’s good advice.

He’s using amazon as his example. Talking about the top and left nav and how they make search prominent.

Sweet, they’re giving out jane and robot stickers. Gotta get me one of those.

Google shows fewer images in search results depending upon your screen resolution.

adding dimensions to your images in addition to alt attributes can help browsers and search engines, and increase speed.

Use .png files if possible. Much smaller than .gif or .jpg

use EXIF, Tags, Geo, and what not.

Looks like we’ve killed twitter too.

Things you can test:

1. pages indexed. everybody knows how to do a site: query

2. Canonical (www and non www)

3. in-links using yahoo site explorer. (there was a plea to binghoo to keep this tool live)

4. sitemaps – blah blah blah

5. site speed – I’m so tired of hearing about this. it’s not a big factor at all.

Maile says that Google still doesn’t want search results in their search results. Disallow your search pages. Category pages however, are still very welcome.
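One way to sanity-check a robots.txt rule before deploying it is Python’s built-in `urllib.robotparser`. This is a sketch only: the `/search` path and the example URLs are assumptions, so substitute whatever path your site search actually lives under.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block internal search results, leave the rest open.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, agent="Googlebot"):
    """Return True if `agent` may fetch `url` under the rules above."""
    return parser.can_fetch(agent, url)


print(allowed("http://www.example.com/search?q=shirts"))
print(allowed("http://www.example.com/category/shirts"))
```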

Maile also said that Google uses toolbar page load times not googlebot crawl times. Good to know, but still don’t like people obsessing so much over speed.

text-indent: -999px is not a safe technique to use instead of alt text.

PubSubHubbub is an open protocol that lets you push content to search engines rather than let them try to index you. It’s not yet incorporated into Google’s pipeline.

June 9th, 2010

I’ll be live blogging the “so you want to test seo” panel at 10:30 pacific time. Check back here for live updates. This should be a good session.

Actionable, testing, etc. These aren’t words you normally hear when people talk about SEO. So glad to hear them. Several people in the room admit to testing SEO. One guy admits that he’s perfect and doesn’t need to test his SEO.

Conrad Saam – director Avvo is speaking now.

He’s talking about statistical sampling and the term “statistically relevant” – I feel this is something that many SEOs fail at.

The average person in this room has 1 breast and 1 testicle. A good example of how averages can be misleading.

He’s now talking about sampling, sample size, variability, and confidence intervals. Also the difference between continuous and binary tests. This is very similar to my college statistics class.

His example of “bad analysis” looks awfully similar to some of the stuff I’ve seen on many SEO blogs. It would have been real easy for him to use a real example from somebody’s blog.

Bad analysis: showing average rank change in google based on control.

Good analysis: Do a type 2 T Test. Excel can do that.

It’s all about the sample size when doing continuous testing
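For anyone without Excel handy: TTEST with type 2 is the two-sample, equal-variance t-test, and the statistic itself is simple enough to compute by hand. The sketch below uses only the standard library and made-up rank-change numbers. It stops at the t statistic and degrees of freedom; turning those into a p-value needs the t distribution (a t table, or a stats package such as SciPy).

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample_a, sample_b):
    """Two-sample t statistic assuming equal variances. Returns (t, degrees of freedom)."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(sample_a) + (n_b - 1) * variance(sample_b)) / df
    t = (mean(sample_a) - mean(sample_b)) / sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return t, df


# Invented data: rank change per page for a control group and a changed group.
control = [0, 1, -1, 0, 2, -2, 1, -1]
changed = [3, 2, 4, 1, 3, 5, 2, 4]
t, df = pooled_t(changed, control)
print("t = %.2f with %d degrees of freedom" % (t, df))
```

With 14 degrees of freedom, a two-tailed test at the 5% level needs |t| above roughly 2.145, so a small sample only “wins” when the difference is large relative to the noise.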

http://abtester.com/calculator – good resource for calculating confidence.
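I don’t know exactly what that calculator does under the hood, but a common approach for binary tests (converted or didn’t) is a two-proportion z-test. Here is a minimal sketch with invented numbers; the `ab_confidence` name and the choice of a two-sided test are my assumptions.

```python
from math import erf, sqrt


def ab_confidence(conv_a, n_a, conv_b, n_b):
    """Two-proportion z-test. Returns (z, two-sided confidence that A and B differ)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # For a standard normal, P(|Z| <= |z|) = erf(|z| / sqrt(2)).
    confidence = erf(abs(z) / sqrt(2))
    return z, confidence


# Invented example: 50 of 1000 visitors convert on A, 70 of 1000 on B.
z, confidence = ab_confidence(50, 1000, 70, 1000)
print("z = %.2f, confidence = %.1f%%" % (z, confidence * 100))
```

A 40% relative lift that still falls short of 95% confidence is a good reminder of why sample size matters.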

Common mistakes:

Seasonality.

Non representative sample

non bell curve distribution

not isolating variables. This one is huge in SEO as there are over 200 variables considered.

Eww… he’s talking about the google sandbox. Just lost some cred with me, as I don’t believe in a Google sandbox – but it does make his point when testing SEO – that we can’t be 100% sure some changes actually caused the results.

Next up, John Andrews

An seo wants:

to rank better

robustness

avoid penalties and protect competition.

As an agency, one wants actionable data to help make the case for why we want that.

Claims: (that need to be tested)

PR sculpting does/doesn’t work.

Title tags should be 165 characters

only the first link on a page counts.

there is no -30 penalty

John says that authors of studies and blogs place more value on the claims and not so much value on the data. The difference between marketing and science is that marketers tell stories and make claims – scientists deal with data.

Problems with SEO studies

Remarkable claims get the most attention.

Studies are funded by sponsors who have something to gain.

There’s virtually no peer review.

Success is based on attention not validity.

“citations” are just links – and not as valid as real citations.

Note to self, buy a copy of “the manga guide to statistics”

So how can we contribute?

Science is slow boring and not easy.

Most experiments don’t produce significant results

scientists learn by making mistakes

As SEOs we’re stat checkers. We’re too busy seeing how much we just made and how many visitors we just got to deal with experiments. That’s so true.

Tips: Publish your data without making claims. Be complete and transparent. Say “this is what I did and this is what I saw” and people will email you, cite you, or repeat your experiment. Invite discussion about your test.

A good example of this was Rand’s .org vs .com test where he didn’t account for Wikipedia bias and also didn’t allow for the fact that most .com domains were brand names (which he excluded).

When it comes to SEO testing, just say what you saw. Let the data tell the story and let others come up with the same analysis that you did. That’s science. Publishing claims is often just a push for attention. Man, that’s so true.

Next up, Jordan LeBaron.

Don’t trust Matt Cutts, test your own shit. Different things work in different situations.

Plan. Execute. Monitor. Share. Maintain Consistency.

Branko Rihtman – a molecular biologist who runs seo-scientist.com

Define question, gather info, form hypothesis, experiment, analyze and interpret data, publish results, retest. That’s the scientific method.

Choose your testing grounds. don’t use real or made up keywords, use nonsensical keywords made up of real words (like translational remedy or bacon polenta)
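Coming up with nonsense phrases by hand gets old quickly, so here is a throwaway sketch that pairs real words at random. The word list is invented apart from Branko’s two examples, and you would still need to confirm by hand that a phrase has no existing exact-match results before using it.

```python
import random

# Real dictionary words; the first four come from Branko's examples.
WORDS = ["translational", "remedy", "bacon", "polenta",
         "lantern", "quorum", "velvet", "gasket"]


def nonsense_phrase(rng, words=WORDS):
    """Return two different real words joined into an unlikely phrase."""
    first, second = rng.sample(words, 2)
    return first + " " + second


rng = random.Random(2010)   # seeded so a test run can be reproduced
print(nonsense_phrase(rng))
```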

How to interpret data:

Does the conclusion agree with expectations? Does it have an alternative explanation? Does it agree with other existing data? Bounce the findings off of somebody. Don’t have definite conclusions.

Statistical analysis is hard. Get help from somebody who knows statistics. Understand correlation and causation, understand significance. Don’t rely on averages.

Avoid personal bias. Don’t report what you want to see or what you thought you saw, report what you actually saw.

You can learn a lot from buying branko a becks. It’s a known fact that scientists can’t hold their alcohol.

June 9th, 2010

Matt Cutts SMX Keynote starts in 10 minutes. Stay tuned here (and keep refreshing) for live blog coverage.

Firstly, I find it amazing that Matt Cutts has his own set – different than what everybody else uses.

Danny started out with some lifejackets for MayDay and then rejecting the Caffeine free diet coke in favor of some with Caffeine. Cute.

First up – Caffeine. Caffeine is now live! Here’s the official Google announcement. Way back when, Google hadn’t indexed the web in about 4 months. Cue the Google dance – where they’d pull data centers out of rotation and people got different results based on the data center they went to.

Elections, 9/11 reminded Google that QDF, or freshness matters. in 03 they came up with the Fritz update to switch to incremental updates. Now, we have caffeine. Instead of crawling billions of pages, indexing later, then pushing live – caffeine indexes a document immediately after it crawls it.

50% fresher documents. Easier to annotate. Unlocks a ton of flexibility on the Google side.

Mayday Time.

Matt’s team had nothing to do with Mayday. It was an algorithmic change. According to my lunch conversation with Maile Ohye, the goal of Mayday was to make long tail results more useful.

Matt is parroting what Maile said at lunch: How do you look at stuff that’s technically not webspam, but it’s not very high quality either. Maile called these things “weeds.”

If you’re affected by MayDay look at your content and see how you can add usefulness, or unique content.

Danny’s point was right on – hopefully this hurts mahalo or demand media. He didn’t acknowledge it, but it’s clear this could be aimed at that crap Calacanis unleashes on the web daily.

Matt refuses to call out a low quality site, but Danny does: it’s eHow. I agree, those things suck.

Matt says they’re going to look at video sitemaps more. That’s good since Bing doesn’t.

Webmaster tools is adding “crypto 404s” or “soft 404’s” That’s where you return a 200 code but the page itself says “not found.”
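You don’t have to wait for Webmaster Tools to spot these. A rough sketch of the idea follows, assuming you already have the status code and page body from your own crawl. The hint phrases are guesses on my part, not anything Google has published about how it detects soft 404s.

```python
# Phrases that suggest an error page; purely illustrative, tune for your own templates.
NOT_FOUND_HINTS = ("not found", "no longer available", "page does not exist")


def is_soft_404(status_code, body):
    """Return True when a page answers 200 but its text reads like an error page."""
    if status_code != 200:
        return False            # a real 404 is not a *soft* 404
    text = body.lower()
    return any(hint in text for hint in NOT_FOUND_HINTS)


print(is_soft_404(200, "<h1>Page Not Found</h1>"))
print(is_soft_404(404, "<h1>Page Not Found</h1>"))
print(is_soft_404(200, "<h1>Welcome to the store</h1>"))
```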

Google is looking at improving the cache. Wouldn’t it be great if you could figure out where/how Google pulls your snippet? Danny wants Matt to stop using ODP – however we can all opt out of using ODP with a meta tag.

Danny: Google should buy ODP and fix it, then they can be #2 for all results right under wikipedia.

Question Time: (I’m only posting questions that don’t bore me)

Mayday is NOT related to google news at all.

HTML5 is also unrelated to caffeine. Having validated code does Not make you rank higher.

Matt just called out a webmasters tools engineer. He has no idea what type of night he’s in store for from the mobs here.

Google’s approach to paid links is more so just to stop those links from counting.

I’m stealing this from toddmintz: Sculpt abs, not pagerank.

Google has improved its discovery of links within javascript – in many cases including the spam case – but that doesn’t mean you should use JS links over static HTML links.

Apparently Chrome is very popular in Belgium – either that or they’re sucking up to Google.

Danny Sullivan created Matt Cutts twitter account as a joke then gave it to him. That’s how Matt got on twitter. Matt says buzz is similar to twitter in how we all signed up, didn’t know what it was for, then started using it. I thought that was wave…..

The crowd got a nice laugh out of the term “Many search engines.”

Google is looking at changing rich snippets so that it can happen automagically without webmasters having to apply. This is something I’d really like to see. Timeframe is short, hopefully not months.

Can Google tell if a page is positive or negative? Yes. Do they use sentiment analysis as a signal? Matt doesn’t think so.

Google analytics and bounce rate are not used in general search rankings. Let me repeat: Google doesn’t use bounce rate.

Danny suggests putting Wikipedia in its own section, separate from the rankings, and letting everybody else rank.

June 8th, 2010

Link Building can be boring. Let’s hope this isn’t about top 10 lists and the same old shit we’ve already heard.

Roger Montti – b2b links are a challenge. Use allintitle: “keyword” to find sites that link to relevant resources.

Backlink Trolling – see who’s linking to your competitors and cherry pick the best ones. You can’t just rely on this technique though. It’ll only make you “as good” as your competitor, not better. Ideally you want to find sites that don’t link to your competition. Work with a reputable link building agency for link acquisition.

linkdomain:relatedsite.com -site:relatedsite.com “sponsors” will find sponsorship opportunities that your competitors are using.

Did you know that .us sites can’t hide site ownership? Just a thought.

Industry associations are a great way to get links. Spam thought: Creating a fake industry association is a great way to get people to pay you for links!

Paid Links. We all do it, we’re all discreet about it. If you’re not discreet you’re welcoming a reputation management issue.

So far, much of this presentation is the same old crap we’ve been talking about for years. Now I see why Danny Sullivan hates putting together link building panels.

Danny’s Tip: Don’t ask him or Matt Cutts for a paid link.

Ok Arnie Kuenn’s turn now.

Have fun and be creative. Do a little research before you contact somebody asking for a link.

Build a relationship with the site you’re asking for a link on.

Find broken links, email the webmaster and let them know about the broken links while suggesting your own site as a resource to be added.

Hmm. Offer to write a guest post for a blog and offer to promote that post on your twitter account. This works great if you have a good twitter following.

This is the guy behind the “google to start SEO agency” story that made rounds in late April after newsvine didn’t get the april fools joke.

Chris Bennett is up now.

Infographics are cheesy but they seem to work very well for making something boring interesting. This makes sense because I’ve retweeted a few of his graphics in the past without even knowing it.

Offer to “guest viral” content out to other sites.

Gil Reich: Being an authority starts with claiming that you are.

No offense to Gil, he’s really entertaining, but I think I’ve become bored with link building in general. This time slot needed something better. Oh well.

Stop trying to figure out what people/engines perceive as trusted and authoritative and work on becoming trusted and authoritative.

Debra Mastaler – I don’t know who she is, but she likes the Queen of England. #random

How can you get even more links out of your content? She recommends Dapper.net and RSS Mix. Also check out Yoast’s WordPress RSS footer plugin: http://yoast.com/wordpress/rss-footer

Don’t chase links, chase the community! That’s solid advice.

You can’t fight wikipedia? Sure you can: I did.

Interesting idea: use chat roulette with a sign saying “link to me.” Not sure I like it, but it’s creative.

Buy old websites that have links. I’ve tried this myself but I’m not a big fan. Purchasing a live site that still exists though does have some legs to it – especially for microsites.

My (ever evolving) Summary: Link building is hard, and most SEOs don’t want to do hard work. That’s why we keep wanting link building sessions, but when it comes down to it the only good methods are the tried and true ones: build quality content. Good content will go viral with very little effort and it helps users.

Thought of the day: We’d get a lot more links by just all linking to each other than by sitting in a room for an hour talking about link building.

June 8th, 2010

Session 1 out of the way. Met Matt Cutts, found some water, and stole a press seat in the front row with a power strip! Seeing how this session is about Real time SEO, I should probably live blog it. What’s more real time than that?

Danny started out by telling us what search is. Thanks Danny. He’s now telling us what search marketing is about. We all know this, and yet strangely most of us gave the same presentation to a cabbie on the way here this morning.

Normal Search, blah blah blah we all know this. No need to summarize here. But what is real time search?

A key difference between normal search and real time search is that with real time search you know exactly who’s asking. That’s what happens on Twitter. Unlike how Google is many to many, real time search is one to one. You see the tweet, and reply to the tweet.

Danny’s talking about “anyone know” searches. I’ve done a bunch of these in the past – with very few replies. He’s talking about an experiment where he answered questions with links, and actually got thanked!

Yes, Twitter nofollows links, but that nofollow falls off on all the places using the API or scraping Twitter. I’ve tested this personally and it’s worked great.

Even though it’s called real time search, relevancy is still king. Relevant > Recent. Relevant results can even get more “hang time” in search results.

YourOpenBook.com – plenty of marketing or nefarious potential.

Stew Langille from Mint is taking the podium now. I’ve always been a big fan of Mint – having used it during beta.

Stew is talking about developing strong content for real time SEO. Trending topics, news, financial trends, etc all make strong topics for Mint. Talks about seeing search for “trillion dollars” back during auto bailouts and creating a video – that ranked well in real time results. To me, this is somewhere small agencies can shine, as a large company could never pull off a same time video.

Mint also tailors content specifically to communities like Digg (example: the renter’s manifesto.)

RT @AdrianEden: No matter how well you do something, if you do it in a boring fashion no one cares.

Q&A is a big part of real time search. Mint has developed their own Yahoo Answers-style section. Noticing many sites devoting more real estate to Q&A.

Mint uses APIs from Google Trends and Twitter, as well as free tools like Klout.com and Hootsuite (I hate Hootsuite). Theory: build what you can’t buy; the data and APIs are out there.

Danny’s back on the podium. Believe it or not, Google IS a real time search engine. However, it’s hard to gauge traffic from those links that show up in real time search. Cue Advance Internet and John Shehata. They run Mlive.com, a favorite site of mine, as well as 25 newspapers that you’ve probably heard of.

Real time ranking factors:

Author Quality + Site Authority / Trust + Relevancy

For many things like Twitter, facebook, myspace, etc traditional ranking signals just don’t exist.

Things to consider: User authority, blogging freshness, number of followers, quality of followers, ratio of followers, URL real time resolution, and retweets in the last minute, hour, and day.

SEO Things that don’t really apply to real time search: Number of links, domain authority, quality of links, inbound vs outbound links, link neighborhoods.

It’s not about how many followers you have, but how reputable those followers are. @pageoneresults has been screaming this for years.

Try running your account through Twitalyzer to see your influence.

Engagement is NOT a huge factor when it comes to ranking. my note: but don’t discount engagement when it comes to measuring ROI.

OK so how do I get into real time search?

Google has a firehose from twitter. A quick way to check to see if you are included is to go to twitter and search for from:username

Make it easy for users to share your links, tweets, statuses, etc – but don’t overdo it by adding 50 little icons. John recommends limiting it to 5. The easier the better, twitter API, twitter box, etc.

It’s all about encouraging retweets. Saying “please retweet” actually works. Also keep content short to leave room for the RT @username stuff.

Connect your social profiles from site to site. I do that here: ryanmjones.com

Monitor hot trends. Spammers do this well – so should you. Do you have a calendar of seasonal search trends that relate to your business? Queries like IRS address happen yearly. People search for movie times on Saturdays. Are you prepared?

If you’re not using Google Trends, you need to be. It lets you know all kinds of useful information like who broke the story, the top links, how many mentions, etc.

Things Not To Do:

Don’t spam hashtags by putting them all into the same tweet. #smx #mileycyrus #bp #oil

Don’t abuse URL shortening services

Don’t have several twitter accounts on the same IP

Don’t post spammy looking tweets (there goes my whole account)

You can track real time search results in analytics packages by looking for google.com/url?q= in the referer. Nice find!
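If your analytics package doesn’t make this easy, the check can be roughed out by hand. Here’s a minimal sketch in Python, assuming you can export raw referrer strings from your logs. The function names are mine (hypothetical), and the only rule it applies is the one from the session: a google.com/url referrer carrying a q= parameter.

```python
from urllib.parse import urlparse, parse_qs


def is_realtime_google_referrer(referrer):
    """Return True if the referrer looks like google.com/url?q=...

    This only encodes the pattern mentioned in the session; it is not an
    official definition of what counts as a real time search visit.
    """
    parsed = urlparse(referrer)
    host = parsed.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    # Require the google.com host, the /url path, and a non-empty q parameter.
    return (
        host == "google.com"
        and parsed.path == "/url"
        and "q" in parse_qs(parsed.query)
    )


def count_realtime_visits(referrers):
    """Count how many referrer strings match the pattern above."""
    return sum(1 for r in referrers if is_realtime_google_referrer(r))
```

Feed it the referrer column from your log export and compare the count against regular google.com/search referrers to get a rough feel for the split.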

Hugo Chavez went from associating Twitter with Terror, to hiring 200 people to manage his twitter account calling it a “weapon that needs to be used by the revolution.” Hugo Chavez cracks me up.

Don’t spam the world to create ambient buzz. You need a human behind your twitter stream. It’s all about smart automation. You need to be engaged.

The thing I can’t fathom is that people still tweet asking for cheap hotels and airfare. I always assumed most people already know where to look. Spam idea: tweet keyword relevant questions so that you can answer them with your corporate account?

Instead of creating several twitter accounts, try creating granular twitter lists and promoting those.

Let’s talk about our tools (giggity)

Twitterfeed was mentioned. dlvr.it seems to have a nice feature set. closely.com could be good for small businesses offering specials.

Chris says he knows blackhatters watching google trends and google news to pick hot topics to mention. He’s right, I know several people in this room who do exactly that.

Matt McGee doesn’t like using automated tweet tools. I agree – we can only take so much Guy Kawasaki in our lives. (update: Danny made this comment a few minutes after I just did. I’m glad we agree)

Danny Sullivan referred to new style retweet as “blech” The panel seems to be split on their preferred method. I prefer the old style where everybody sees it.

One thing I’d like to see in Twitter is topicality over time. How can we know how often Danny Sullivan tweets about pizza? Has he mentioned it in the past? We currently don’t know.

My thoughts & summary: There seems to be a fine line between properly using real time search and spamming – and most of it has to do with whether or not your users find you helpful. When it comes down to it, real time search is similar to normal search in that it’s all about finding relevant and useful results that are helpful to users.

June 8th, 2010

SEO for Google vs Bing is about to start here at SMX. Matt Cutts, Rand Fishkin, Sasi and Janet are in the house. Danny just opened with a terrible Sex and the City 2 joke, and now we’re being pitched to. Really, starting with a pitch? #smx #a1a (more spam shit).

Ok here goes. I’ll edit this down to only the crap that seems to matter. I’m leaving out all the basic stuff that would bore most people.

Sadly, Al Gore is not in the room.

Janet Driscoll Miller: Why should we worry about Bing? It’s going to be a two-engine world soon. Heat maps show that people look at Google and Bing the same. Bing seems to outperform Google on Pages/Visit and Time on Site according to her metrics.

Bing supports .xml sitemaps via the sitemaps.org protocol – however, unlike Google, Bing doesn’t accept video or news sitemaps. It does support sitemap indexes though.

Google has Places for local listings; Bing has something similar. It’s called Bing Local Listing Center, but it doesn’t support this Chrome browser I’m using.
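For anyone who hasn’t built a sitemap index before, here’s a minimal sketch in Python using only the standard library. The element names and namespace come from the sitemaps.org protocol; the function name and the example.com URLs are placeholders I made up.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap_index(sitemap_urls):
    """Return a sitemap index document (as a string) listing each sitemap URL."""
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        entry = ET.SubElement(root, "sitemap")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical child sitemaps; swap in your own.
    print(build_sitemap_index([
        "http://www.example.com/sitemap-posts.xml",
        "http://www.example.com/sitemap-pages.xml",
    ]))
```

Save the output as something like sitemap_index.xml and submit that one file instead of each sitemap separately.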

She’s claiming that site links in Google and Bing only show on the first result, and Matt Cutts perked up. I think he noticed she’s wrong. Here’s an example query: http://www.google.com/search?hl=en&q=fail+pictures&aq=f&aqi=g4g-s1g5&aql=&oq=&gs_rfai=

In Bing, you can’t edit your site links like you can in Google.

Bing News: has no way to submit your site to their news search. You’ll have to email [email protected] with an RSS feed.

Oh Noes! Cash back is going away in July.

Bing has added a “share this” button right into search results for images (example: polar bears). I’m not sure how crazy I am about this. Who shares something before visiting it? Also, no link love provided – the “share this” feature gives you a Bing URL, not the URL of what you’re sharing.

Cutts got a good chuckle out of the fact that Bing video preview only works on Youtube and not on MSN video. Looks like Bing needs a helping of their own dog food. I hear it’s tasty. Here’s Matt’s Take on the session.

To disable document preview in Bing search results, add: <meta name="msnbot" content="nopreview">

Or you can send x-robots-tag: nopreview as an HTTP response header. (It’s a header your server sends, not something that goes in your robots.txt file.)
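Keep in mind that X-Robots-Tag is an HTTP response header, so it has to be sent by your server with each page. Here’s a minimal sketch of one way to do that, assuming a Python WSGI app. The middleware and the tiny hello_app are hypothetical names of mine, and whether a given engine honors nopreview in the header is something to confirm in its own webmaster docs.

```python
def add_nopreview_header(app):
    """WSGI middleware that appends X-Robots-Tag: nopreview to every response."""
    def wrapped(environ, start_response):
        def patched_start_response(status, headers, exc_info=None):
            # Copy the headers so the wrapped app's list is left untouched.
            headers = list(headers) + [("X-Robots-Tag", "nopreview")]
            return start_response(status, headers, exc_info)
        return app(environ, patched_start_response)
    return wrapped


def hello_app(environ, start_response):
    """Stand-in application; replace with your real WSGI app."""
    start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
    return [b"<p>Hello</p>"]


# The object your WSGI server would be pointed at.
application = add_nopreview_header(hello_app)
```

If you’re on Apache or nginx, the same header can be set in the server config instead; the point is simply that it travels with the response.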

Danny is talking about making pages for different search engines. Some people admitted to doing this. It’s not something I’d like to admit in a room of SEO’s.

Rand has a slide up about bringing more science to SEO. I’m all for that, but how successful will that be in an era where roughly 50% of SEOs don’t think that they need to know HTML? You can check out Rand’s slides here.

So far if I could sum up the Bing/Google strategy in one sentence it would be: Just make an awesome, findable site and don’t worry about the differences.

So glad Rand brought up correlation and causation, as this is where many misguided SEO beliefs come from. It’s also the focus of all those video questions I had answered last month.

Keyword exact match domains have a very high correlation to top ranking in both Bing and Google. Is the domain that much of a factor? Or is it that an exact match domain can really only be about that topic? Also, anchor text to a site like “failpictures.com” would probably just say “fail pictures”, giving a substantial boost. (my example, not his… I typed this before he spit his out)

All in all, keywords in domains DO matter – as those of us in spammy activities have known for a while!

Interesting: Keywords in sub-domain have very low correlation to ranking in Bing, but much higher in Google. Factor, or related to the high volume of sub-domain spam out there?

Rand is talking about ALT attributes. Where’s Jill Whalen at? 🙂

Rand’s data shows .org has the highest correlation to rankings, and negative correlation to .edu. WTF? Here’s where correlation <> causation comes in – but I’m willing to bet several SEOs sitting in this room are currently registering .org domains. update: looks like this data is completely skewed by wikipedia since it’s a .org.

Rand said some more stuff about links and anchor text, but I missed it while I was hijacking Matt Cutts google buzz page and discussing shitty seos with Janet Miller on Twitter.

Cutts is speaking now.

Matt says Don’t chase search engines, chase the user experience – because that’s the goal of search engines; to chase search experiences. They just have different methodologies of doing so, but the goal is the same.

Matt says Bing shows Wikipedia more than Google – I didn’t know more than 100% was possible.

Sasi says don’t worry about “bing-like” or “google-like”, worry about “what does the user like?” Don’t do anything specific for search engines (I’m sensing a theme here) do it for the user.

Good question for Sasi: What will happen to yahoo site explorer? Sasi says at the end the SEO experience will be good – but no official details here.

What we DO know is that Bing & Yahoo rankings will be exactly the same – just like the old Google AOL deal.

Idea for next time: Bring a loud buzzer for when people speak.

Time’s up, I hope you found this review helpful. Leave me some comment love and check back later for other live blogs from the session – if I can find a power outlet.

June 8th, 2010
